Title: Robotic motion coordination based on a geometric deformation measure

Authors: Miguel Aranda, Jose Sanchez, Juan Antonio Corrales Ramon and Youcef Mezouar

Journal: IEEE Systems Journal, doi: 10.1109/JSYST.2021.3107779

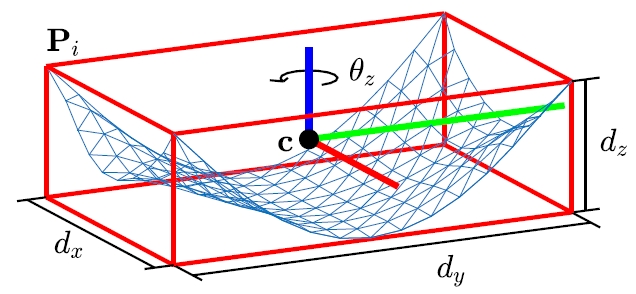

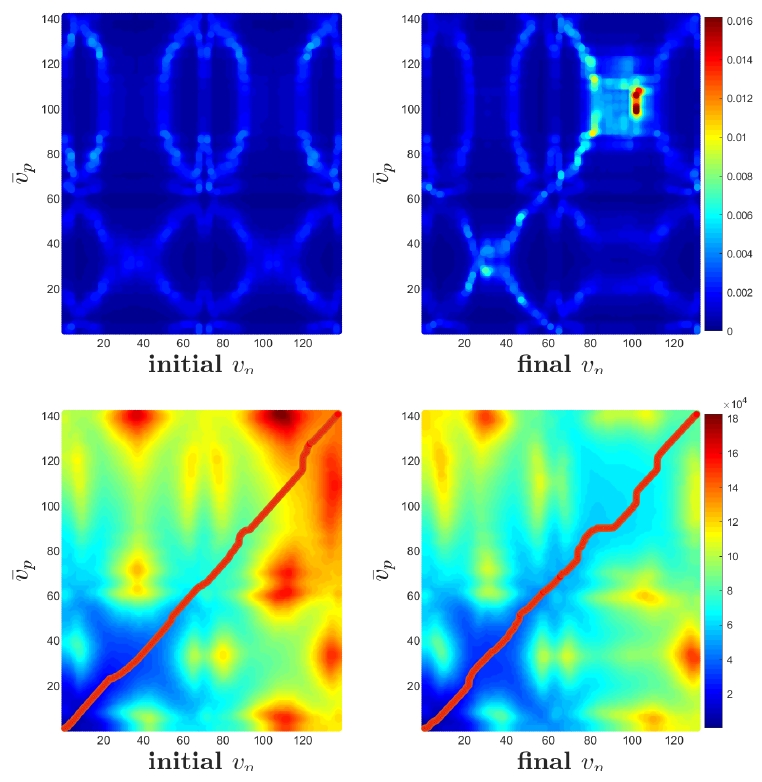

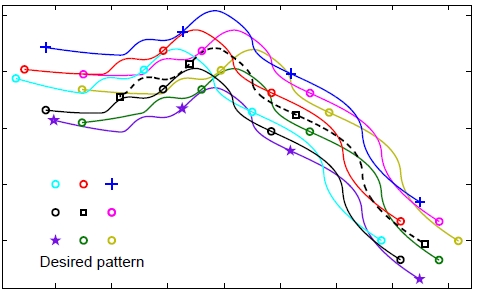

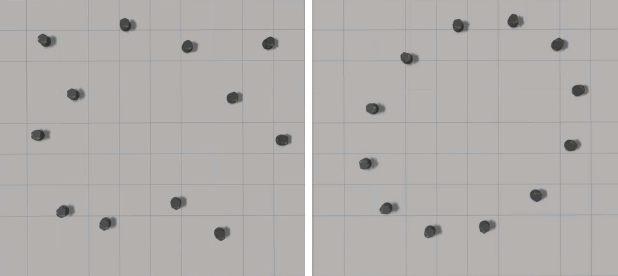

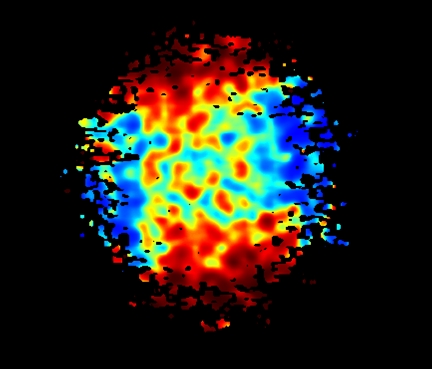

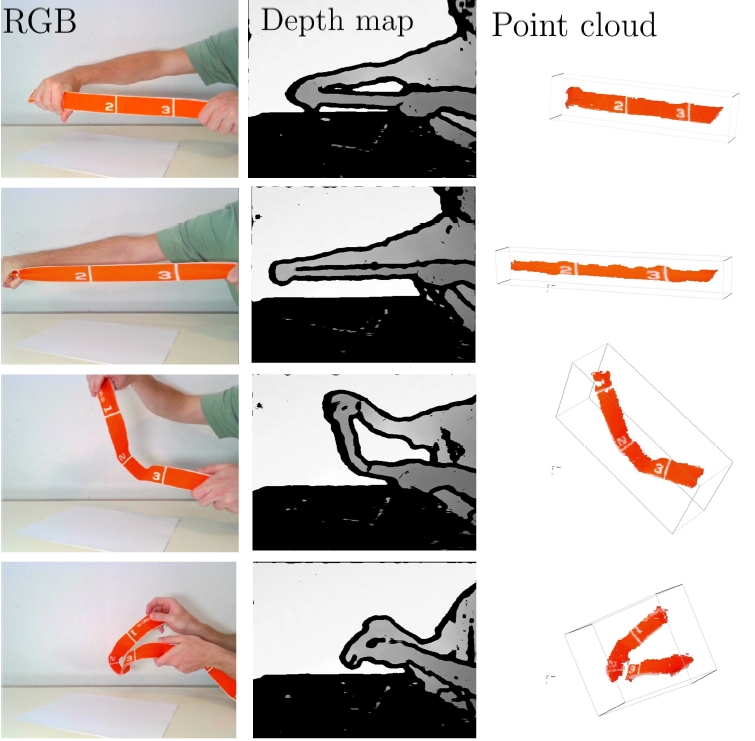

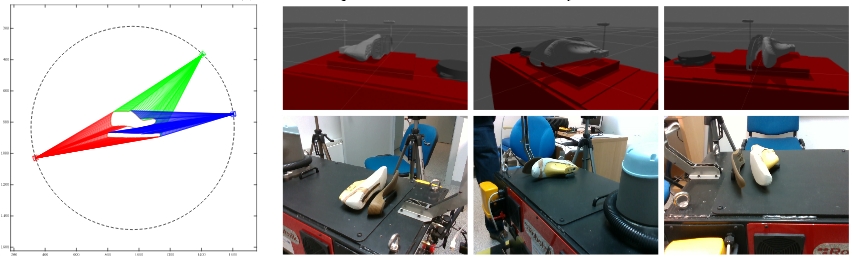

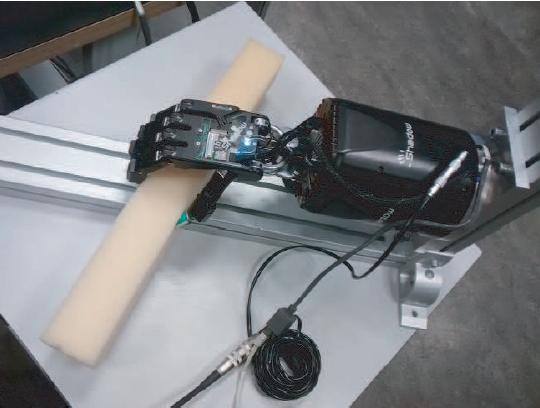

Abstract: This article describes a novel approach to motion coordination in a multirobot system based on the concept of deformation. Our main contribution is to link these two elements, coordination and deformation. The core idea is that the robots’ motions minimize a global measure of the deformation of their positions relative to a prescribed shape. Based on this idea, we propose a linear shape controller that also incorporates a term modeling an affine deformation. We show that the affine term is particularly useful when the deformation to be controlled is large. We also propose controls for the other variables (centroid, rotation, size) that define the geometric configuration of the team. Importantly, these additional controls are completely decoupled from the shape control. The overall approach is simple and robust, and it produces closely coordinated robot motions. Because it is based on deformation, it is useful in several scenarios involving manipulation tasks, e.g., handling a highly deformable object, controlling an object’s shape, or regulating the shape formed by the fingertips of a robotic hand. We present simulation and experimental results to validate the proposed approach.
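
The core idea of the abstract, robots moving so as to reduce a deformation measure relative to a prescribed shape, can be illustrated with a minimal sketch. The formulation below is an assumption made for illustration and is not taken from the article: it treats planar positions as complex numbers, aligns the prescribed shape to the current positions with a least-squares rotation and scaling, and uses half the squared residual as the deformation measure. The names `deformation` and `shape_control_step`, the gain, and the time step are hypothetical, and the affine term mentioned in the abstract is not modeled.

```python
import numpy as np

def deformation(positions, shape):
    """Illustrative deformation measure for planar points (assumed form).

    positions, shape: (n, 2) arrays of current and prescribed positions.
    Returns (gamma, residual), where residual is an (n, 2) array.
    """
    # Represent 2D points as complex numbers and remove the centroids.
    q = positions[:, 0] + 1j * positions[:, 1]
    c = shape[:, 0] + 1j * shape[:, 1]
    q = q - q.mean()
    c = c - c.mean()
    # Least-squares rotation and scaling, encoded as one complex scalar h.
    # np.vdot conjugates its first argument.
    h = np.vdot(c, q) / np.vdot(c, c)
    r = q - h * c                      # what the similarity fit cannot explain
    gamma = 0.5 * np.real(np.vdot(r, r))
    return gamma, np.column_stack([r.real, r.imag])

def shape_control_step(positions, shape, gain=1.0, dt=0.05):
    """One explicit Euler step of gradient descent on the measure above."""
    _, residual = deformation(positions, shape)
    return positions - gain * dt * residual
```

In this sketch the update is linear in the robot positions. The residual sums to zero and is orthogonal to the aligned shape, so the step leaves the centroid, rotation, and size of the fit unchanged, which mirrors the decoupling described in the abstract.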