The final event of the Interreg Sudoe Project COMMANDIA was successfully held online last September 30th. The project coordinator, Youcef Mezouar, opened with an introduction and summary of the project. The partners of the consortium then gave their presentations, with special emphasis on technical, scientific, and dissemination activities and achievements. The event concluded with a round table and an open live discussion with the participants.

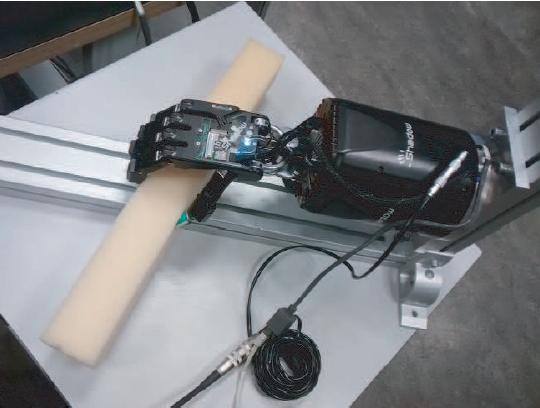

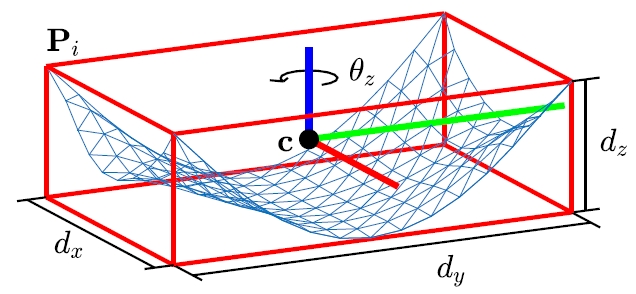

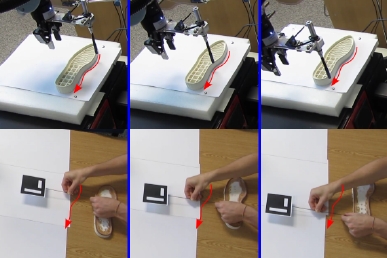

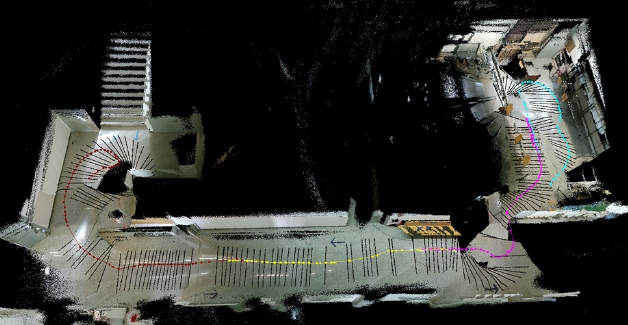

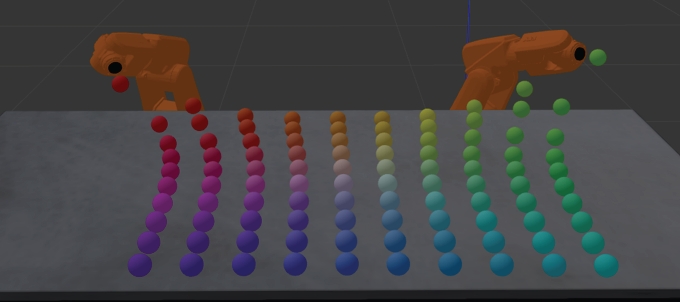

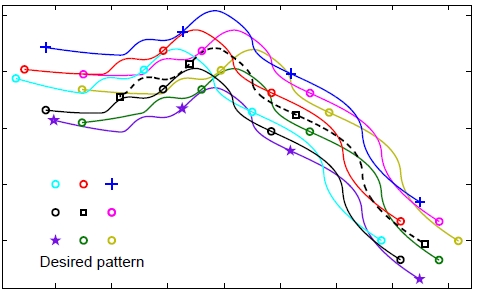

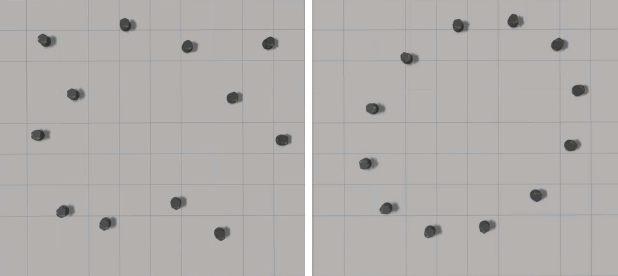

You can find a video that motivates Project COMMANDIA in the following link. Another video here gives an introduction and overview of the project goals. One of the results of the project is a demonstrator that implements some of the techniques developed within the project to manipulate deformable objects. This is explained in this video.

During the COMMANDIA final event we had the opportunity to learn about the work that five partners (SIGMA, U. Alicante, INESCOP, U. Zaragoza, U. Coimbra) developed across three countries (Portugal, Spain, France) on the topic of Collaborative Robotic Mobile Manipulation of Deformable Objects in Industrial Applications. We would also like to take this opportunity to thank all those involved in the success of this project.