Miguel Aranda presented the paper “Deformation-Based Shape Control with a Multirobot System”, coauthored with Juan Antonio Corrales and Youcef Mezouar, at ICRA 2019. The conference was held on May 20-24, 2019, in Montreal, Canada.

Abstract: We present a novel method to control the relative positions of the members of a robotic team. The application scenario we consider is the cooperative manipulation of a deformable object in 2D space. A typical goal in this kind of scenario is to minimize the deformation of the object with respect to a desired state. Our contribution, then, is to use a global measure of deformation directly in the feedback loop. In particular, the robot motions are based on the descent along the gradient of a metric that expresses the difference between the team’s current configuration and its desired shape. Crucially, the resulting multirobot controller has a simple expression and is inexpensive to compute, and the approach lends itself to analysis of both the transient and asymptotic dynamics of the system. This analysis reveals a number of properties that are interesting for a manipulation task: fundamental geometric parameters of the team (size, orientation, centroid, and distances between robots) can be suitably steered or bounded. We describe different policies within the proposed deformation-based control framework that produce useful team behaviors. We illustrate the methodology with computer simulations.
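To give a feel for the idea of descending along the gradient of a global deformation measure, here is a minimal Python sketch. It is not the controller from the paper. It assumes, for illustration only, that the deformation is the least-squares residual left after aligning the desired shape to the current robot positions with the best rotation and uniform scaling in 2D. The names `deformation` and `simulate`, the gain, the step size, and the square target shape are all hypothetical choices.

```python
import numpy as np

# 90-degree counterclockwise rotation, used to build the alignment matrix.
S = np.array([[0.0, -1.0], [1.0, 0.0]])


def deformation(Q, C):
    """Return (gamma, residual) for current positions Q and desired shape C.

    Q and C are 2xN arrays with one column per robot. The metric is an
    ASSUMED stand-in: half the squared residual after the best
    rotation-plus-uniform-scale alignment of the centered shapes.
    """
    Qb = Q - Q.mean(axis=1, keepdims=True)  # positions relative to centroid
    Cb = C - C.mean(axis=1, keepdims=True)
    denom = np.sum(Cb * Cb)
    # Closed-form least-squares solution for H = [[h1, -h2], [h2, h1]].
    h1 = np.sum(Qb * Cb) / denom
    h2 = np.sum(Qb * (S @ Cb)) / denom
    H = np.array([[h1, -h2], [h2, h1]])
    residual = Qb - H @ Cb
    return 0.5 * np.sum(residual ** 2), residual


def simulate(Q0, C, gain=1.0, dt=0.1, steps=200):
    """Discrete-time gradient descent on the assumed deformation metric."""
    Q = Q0.copy()
    history = []
    for _ in range(steps):
        gamma, residual = deformation(Q, C)
        history.append(gamma)
        # With H chosen optimally, the residual is the gradient of gamma
        # with respect to the robot positions, so each robot steps against it.
        Q = Q - dt * gain * residual
    return Q, np.array(history)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C = np.array([[0.0, 1.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0, 1.0]])  # hypothetical desired shape: a square
    Q0 = rng.normal(size=(2, 4))          # arbitrary initial robot positions
    Q, history = simulate(Q0, C)
    print("initial deformation:", history[0])
    print("final deformation:", history[-1])
```

Under these assumptions the residual columns sum to zero, so the team centroid stays fixed while the deformation shrinks at every step. This loosely mirrors the abstract's remark that geometric parameters such as the centroid can be steered or bounded.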

Download paper

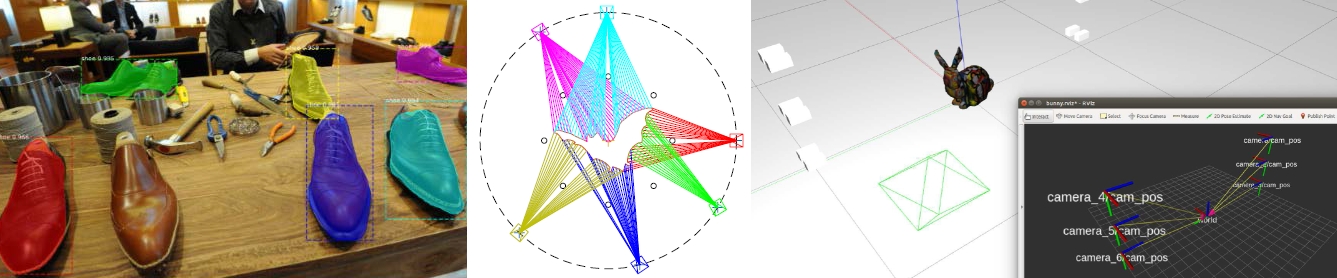

Let us introduce our mobile robotic platform, Campero. It is a mobile manipulator prototype developed within the COMMANDIA project. The project's main goal is to define, design, and implement integrated functionalities that extend the capabilities of robotic systems for manipulating deformable objects in industrial production. With this platform, we will provide a laboratory prototype of a multi-sensor, multi-robot system with manipulation and ground locomotion capabilities, aimed at increasing precision in complex autonomous manipulation of deformable objects.
