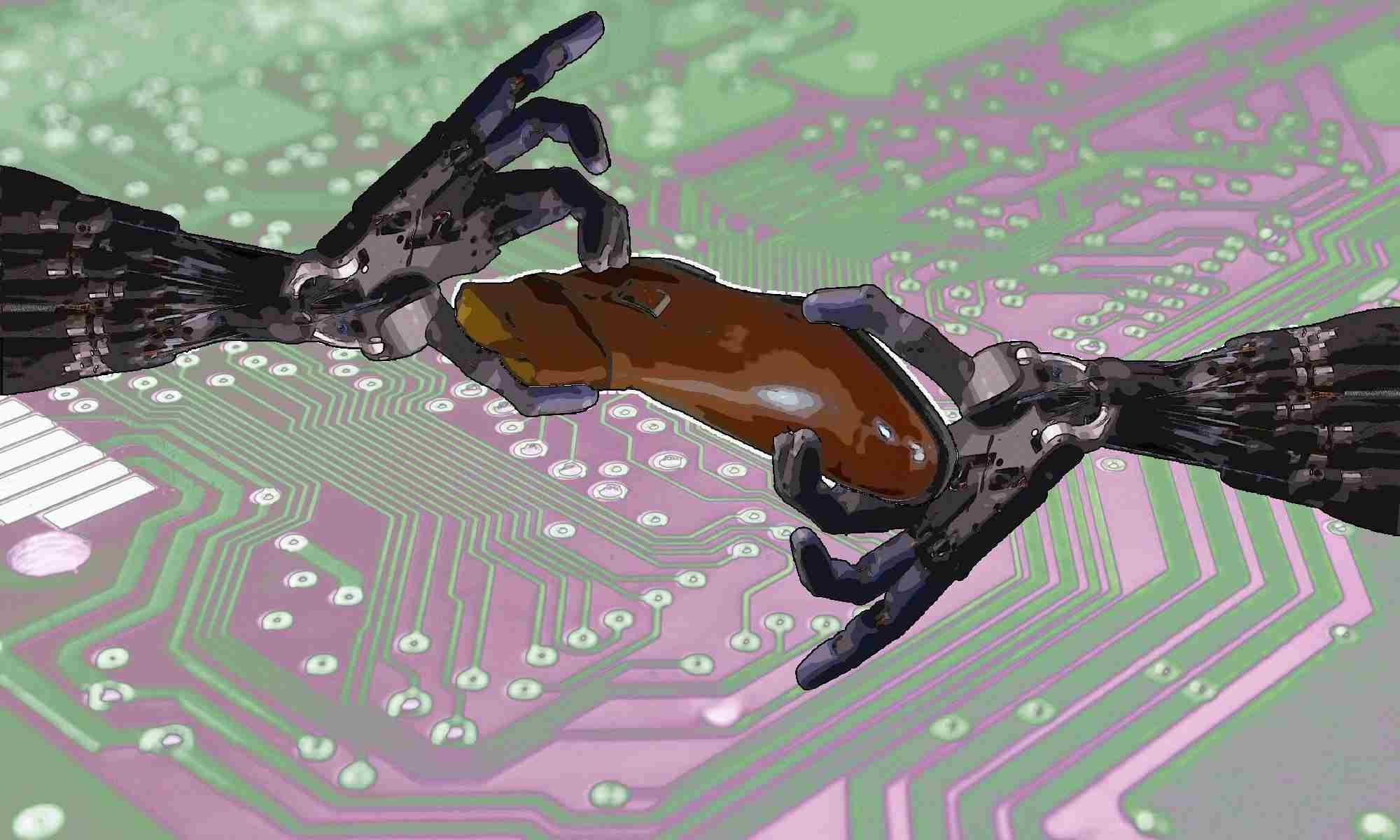

Title: Agarre bimanual de objetos asistido por visión (“Vision-assisted bimanual object grasping”)

Author: J.A. Castro-Vargas, B.S. Zapata-Impata, P. Gil, J. Pomares

Conference: Tejado Balsera, Inés, et al. (eds.). Actas de las XXXIX Jornadas de Automática: Badajoz, 5-7 de Septiembre de 2018. ISBN 978-84-09-04460-3, pp. 1030-1037

Abstract: Object manipulation tasks sometimes require two or more cooperating robots. In Industry 4.0, assistive robotics is increasingly in demand, for example to lift, drag or push heavy and bulky packages. Consequently, it is now possible to find robots with a human-like appearance aimed at helping human operators in activities that involve these kinds of movements. This article presents a vision-assisted robotic platform for grasping and bimanual manipulation of objects. The platform consists of a metal torso with a rotational joint at the hip and two 7-degree-of-freedom industrial manipulators that act as arms. Each arm carries a multi-fingered robotic hand at its end. Each upper extremity uses visual perception from 3 RGB-D sensors located in an eye-to-hand configuration. The platform has been successfully tested in bimanual object grasping, with the aim of performing cooperative manipulation tasks in which both robotic extremities act in a coordinated way.
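In an eye-to-hand configuration the RGB-D sensors are fixed in the workspace rather than mounted on the arms, so a point detected in a camera frame has to be mapped into an arm's base frame through a calibrated extrinsic transform before it can serve as a grasp target. The minimal Python sketch below illustrates only that coordinate change; the extrinsic values and the helper names `make_transform` and `camera_to_base` are hypothetical placeholders, not the calibration or software used on the platform.

```python
from typing import List, Sequence

Matrix4 = List[List[float]]


def make_transform(rotation: Sequence[Sequence[float]],
                   translation: Sequence[float]) -> Matrix4:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    rows = [list(rotation[i]) + [translation[i]] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


def camera_to_base(T_base_cam: Matrix4, p_cam: Sequence[float]) -> List[float]:
    """Map a 3D point from the fixed camera frame into the arm's base frame."""
    p_h = list(p_cam) + [1.0]  # homogeneous coordinates
    return [sum(T_base_cam[i][j] * p_h[j] for j in range(4)) for i in range(3)]


# Hypothetical extrinsics: camera 1.2 m above the base and 0.5 m forward,
# rotated 180 degrees about X so it looks straight down (not a real calibration).
R_base_cam = [[1.0, 0.0, 0.0],
              [0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0]]
T_base_cam = make_transform(R_base_cam, [0.5, 0.0, 1.2])

# A candidate grasp point measured 1.0 m along the camera's optical (Z) axis.
p_cam = [0.1, 0.2, 1.0]
p_base = camera_to_base(T_base_cam, p_cam)
print(p_base)  # approximately [0.6, -0.2, 0.2] (up to floating-point rounding)
```

On a bimanual platform, each sensor would presumably need its own calibrated transform with respect to each arm's base; the same function applies with a different matrix.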


Leaflet

Paper: A Vision-Driven Collaborative Robotic Grasping System Tele-Operated by Surface Electromyography


Author: Andrés Úbeda, Brayan S. Zapata-Impata, Santiago T. Puente, Pablo Gil, Francisco Candelas and Fernando Torres

Journal: Sensors 2018, 18(7), 2366; https://doi.org/10.3390/s18072366

Abstract: This paper presents a system that combines computer vision and surface electromyography techniques to perform grasping tasks with a robotic hand. To achieve a reliable grasp, the vision-driven system computes pre-grasping poses of the robotic system from an analysis of three-dimensional object features. The human operator can then correct the robot's pre-grasping pose using surface electromyographic signals recorded from the forearm during wrist flexion and extension. Weak wrist flexions and extensions allow fine adjustment of the robotic system before grasping the object; when the operator considers the grasping position optimal, a strong flexion initiates the grasp. The system was tested with several subjects and achieved a grasping accuracy of around 95% of attempted grasps, more than 13% higher than in previous experiments in which electromyographic control was not implemented.
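The control scheme described in this abstract (weak wrist flexion or extension for fine adjustment, a strong flexion to trigger the grasp) can be pictured as a threshold classifier on the amplitude of the forearm EMG signal. The Python sketch below is an illustrative assumption, not the authors' implementation: it computes the root-mean-square (RMS) amplitude of one window from two hypothetical channels (flexor and extensor) and compares it against hypothetical normalised thresholds `WEAK` and `STRONG`; real values would need per-subject calibration.

```python
import math
from enum import Enum
from typing import Sequence


class Command(Enum):
    IDLE = "idle"                    # no significant muscle activity
    ADJUST_FLEX = "adjust_flex"      # weak flexion: small corrective motion
    ADJUST_EXTEND = "adjust_extend"  # weak extension: corrective motion the other way
    GRASP = "grasp"                  # strong flexion: close the hand


# Hypothetical normalised thresholds (fraction of maximum voluntary contraction).
WEAK = 0.15
STRONG = 0.60


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def classify(flexor: Sequence[float], extensor: Sequence[float]) -> Command:
    """Map one window of flexor/extensor EMG to a robot command."""
    f, e = rms(flexor), rms(extensor)
    if f >= STRONG and f > e:
        return Command.GRASP  # only a strong flexion starts the grasp
    if max(f, e) < WEAK:
        return Command.IDLE
    # Otherwise the dominant muscle selects the fine-adjustment direction.
    return Command.ADJUST_FLEX if f >= e else Command.ADJUST_EXTEND


print(classify([0.05, -0.04, 0.06], [0.02, -0.01, 0.03]).name)  # IDLE
print(classify([0.3, -0.25, 0.3], [0.05, 0.05, 0.05]).name)     # ADJUST_FLEX
print(classify([0.8, -0.7, 0.9], [0.1, 0.1, 0.1]).name)         # GRASP
```

In this sketch a strong extension is treated as an adjustment rather than a trigger, mirroring the abstract's statement that only a strong flexion initiates the grasp.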


Conference: The digital challenge in the footwear sector. INESCOP - Economía3

Miguel Davia, Head of Advanced Manufacturing at INESCOP, gave the keynote “Servicios de apoyo desde Inescop a la digitalización del Sector Calzado” (“Support services from Inescop to the digitization of the Footwear Sector”) at the forum “El reto digital en el Sector Calzado” (“The digital challenge in the Footwear Sector”) on 7 March 2018.

COMMANDIA in Diario INFORMACIÓN

COMMANDIA in elEconomista.es

COMMANDIA in Heraldo de Aragón

The newspaper Heraldo de Aragón devotes a page to the COMMANDIA project.


COMMANDIA presentation video

A brief video introducing the COMMANDIA project.
