All the partners of the SUDOE project COMMANDIA met today, July 6, 2021, to present and discuss their latest advances.


There is growing interest in the robotics community in investigating the handling of deformable objects. The ability to interact with deformable objects promises new applications for robots: cable assembly in industrial settings, doing laundry in households, dressing assistance in elderly care, organ and tissue manipulation in surgical operations, or fragile sample collection in underwater and space robotics, to name a few. However, deformable objects are considerably more complex to deal with than rigid ones. Specifically, handling object deformation raises new challenges, including the following:

As a result, there is a need for novel methodological and technological approaches in this field, and these advances need to cover the full spectrum of robotic problems and tasks (perception, modeling, planning, and control).

Therefore, the aim of this special issue is to collect the latest research results on handling deformable objects in various robotic applications.

Topics of interest for this special issue include, but are not limited to:

The special issue will follow this timeline:

23 June 2021 Call for Papers

23 Sept 2021 Papercept open for submission

8 Oct 2021 Submission deadline (extended to 22 October 2021)

2 Jan 2022 Authors receive RA-L reviews and recommendation

16 Jan 2022 Authors of accepted MS submit final RA-L version

1 Feb 2022 Authors of R&R MS resubmit revised MS

8 Mar 2022 Authors receive final RA-L decision

22 Mar 2022 Authors submit final RA-L files

27 Mar 2022 Camera-ready version appears in RA-L on Xplore

6 April 2022 Final Publication

Jihong Zhu

(TU Delft/Honda Research Institute Europe, Netherlands/Germany)

Claire Dune

(Laboratoire COSMER – EA 7398, Université de Toulon, France)

Miguel Aranda

(CNRS, Clermont Auvergne INP, Institut Pascal, Université Clermont Auvergne, France)

Youcef Mezouar

(CNRS, Clermont Auvergne INP, Institut Pascal, Université Clermont Auvergne, France)

Juan Antonio Corrales

(University of Santiago de Compostela, Spain)

Pablo Gil

(University of Alicante, Spain)

Gonzalo López-Nicolás

(University of Zaragoza, Spain)

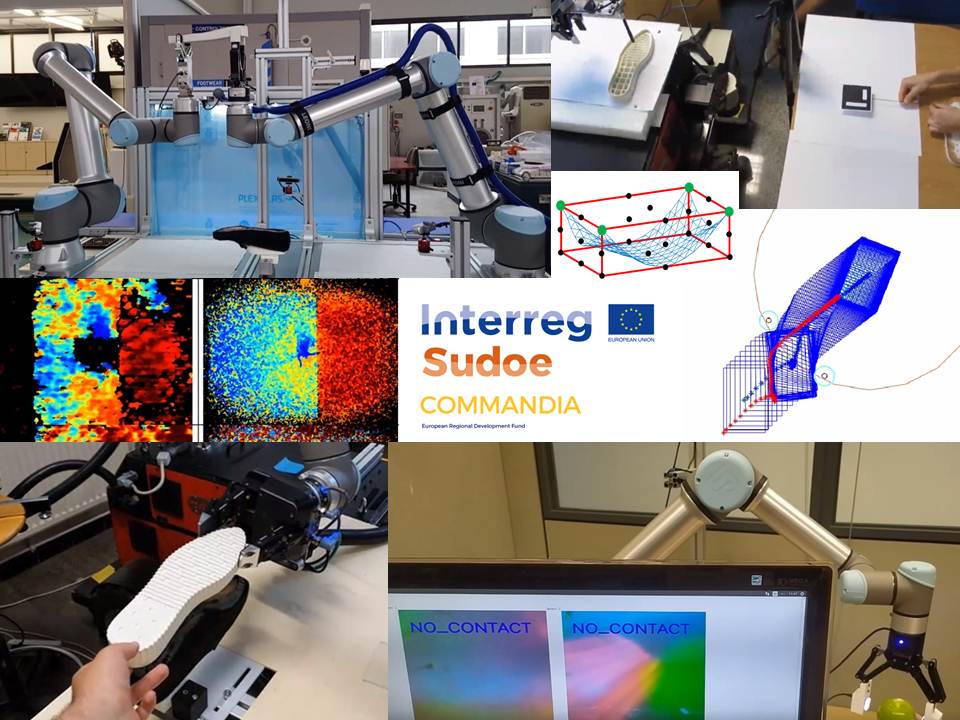

Researchers from INESCOP (José F. Gómez, José M. Gutiérrez, Jesús Arregui and Maria D. Fabregat) have published the article “Robots para el ensamblado de calzado” (Robots for footwear assembly) in the journal “Revista del Calzado”.

Title: Towards footwear manufacturing 4.0: shoe sole robotic grasping in assembling operations

Authors: Guillermo Oliver, Pablo Gil, Jose F. Gomez, Fernando Torres

Journal: The International Journal of Advanced Manufacturing Technology, 2021

Abstract: In this paper, we present a robotic workcell that automates footwear manufacturing tasks such as sole digitization, glue dispensing, and sole manipulation from different locations within the factory plant. We aim to make progress towards shoe industry 4.0. To achieve this, we have implemented a novel sole grasping method that is compatible with soles of different shapes, sizes, and materials, by exploiting the particular characteristics of these objects. Our proposal works well both with low-density point clouds from a single RGB-D camera and with dense point clouds obtained from a laser scanner digitizer. In both cases, the method computes antipodal grasping points from visual data and does not require prior recognition of the sole. It relies on extracting the sole contour using concave hulls and measuring the curvature of contour regions. Our method was tested both in a simulated environment and under real manufacturing conditions at INESCOP facilities, processing 20 soles with different sizes and characteristics. Grasps were performed in two different configurations, achieving an average success rate of 97.5% in real grasps for heelless soles made of materials with low or medium flexibility. In both configurations, the grasping method was tested without tactile control during the task.
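The abstract names three ingredients: a sole contour, a curvature measure along it, and a pair of antipodal grasp points. The Python sketch below shows one way these could fit together; it is not the authors' implementation. It assumes the concave-hull contour has already been extracted as a counter-clockwise list of 2D points, uses the turning angle at each vertex as a crude curvature proxy, and scores point pairs by how well their outward normals oppose each other along the line joining them. The names `antipodal_pair`, `max_width` and `max_turn` are hypothetical.

```python
import math


def turning_angle(prev, cur, nxt):
    """Absolute turning angle at `cur` in radians (a simple discrete curvature proxy)."""
    a1 = math.atan2(cur[1] - prev[1], cur[0] - prev[0])
    a2 = math.atan2(nxt[1] - cur[1], nxt[0] - cur[0])
    # Wrap the difference into [-pi, pi) before taking its magnitude.
    return abs((a2 - a1 + math.pi) % (2 * math.pi) - math.pi)


def outward_normal(prev, nxt):
    """Unit outward normal for a counter-clockwise contour, from the central-difference tangent."""
    tx, ty = nxt[0] - prev[0], nxt[1] - prev[1]
    length = math.hypot(tx, ty)
    # Rotating the tangent by -90 degrees points outward for CCW ordering.
    return ty / length, -tx / length


def antipodal_pair(contour, max_width, max_turn=0.3):
    """Return ((i, j), score) for the best pair; a score of 2.0 means perfectly opposed normals."""
    n = len(contour)
    normals = [outward_normal(contour[k - 1], contour[(k + 1) % n]) for k in range(n)]
    turns = [turning_angle(contour[k - 1], contour[k], contour[(k + 1) % n]) for k in range(n)]
    best, best_score = None, -math.inf
    for i in range(n):
        for j in range(i + 1, n):
            # Skip high-curvature points such as corners (assumed poor contact locations).
            if turns[i] > max_turn or turns[j] > max_turn:
                continue
            dx, dy = contour[j][0] - contour[i][0], contour[j][1] - contour[i][1]
            width = math.hypot(dx, dy)
            if width == 0 or width > max_width:  # the pair must fit inside the gripper opening
                continue
            dx, dy = dx / width, dy / width
            # Antipodal: the normal at i points away from j, the normal at j points away from i.
            score = -(normals[i][0] * dx + normals[i][1] * dy) + (normals[j][0] * dx + normals[j][1] * dy)
            if score > best_score:
                best, best_score = (i, j), score
    return best, best_score


# A 10 x 4 rectangle sampled counter-clockwise, as a stand-in for a sole outline.
contour = ([(x, 0) for x in range(10)] + [(10, y) for y in range(4)]
           + [(x, 4) for x in range(10, 0, -1)] + [(0, y) for y in range(4, 0, -1)])
pair, score = antipodal_pair(contour, max_width=5.0)
print(contour[pair[0]], contour[pair[1]], score)  # (1, 0) (1, 4) 2.0
```

On this toy outline the selected contacts lie across the short side, where the normals are exactly opposed, while the corners are rejected by the curvature threshold. A real sole contour would be noisier, so smoothing the normals or evaluating curvature over a window of points would likely be needed.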

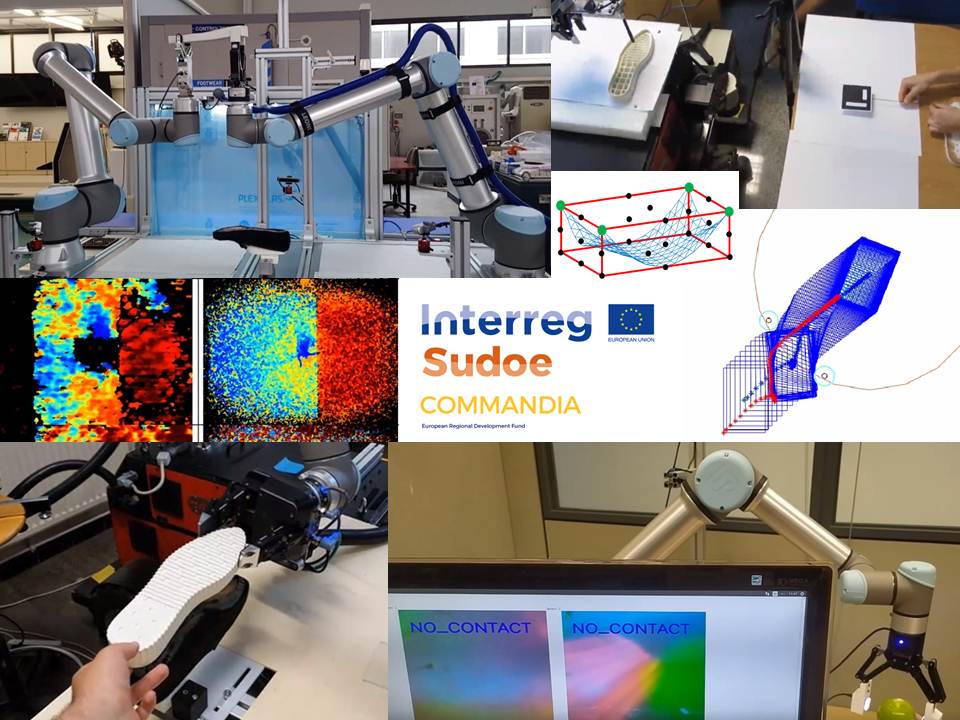

“Simulation of soft bodies in ROS and Gazebo” by Nicolás Iván Sanjuán Tejedor

The main objective of this work was to study the modeling of deformable objects in robotics in greater depth. To this end, a cloth-like deformable object was simulated in the 3D robotics simulator Gazebo within the ROS (Robot Operating System) environment by developing specific plugins.
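The summary does not say which physical model the plugins implement. A mass-spring network is one common way to simulate cloth, and the Python sketch below illustrates it under that assumption: particles on a grid are linked by structural and shear springs, gravity acts on every particle, pinned particles stay fixed, and positions are advanced with semi-implicit Euler integration. All names and parameter values (`make_cloth`, `step`, `k`, `damping`, `mass`, `dt`) are hypothetical; an actual Gazebo plugin would be written in C++ against the Gazebo API.

```python
import math


def make_cloth(nx, ny, spacing):
    """Particles on an nx-by-ny grid in the x-z plane (row 0 on top) and springs (a, b, rest_length)."""
    pos = [[i * spacing, 0.0, -j * spacing] for j in range(ny) for i in range(nx)]
    springs = []
    for j in range(ny):
        for i in range(nx):
            a = j * nx + i
            if i + 1 < nx:
                springs.append((a, a + 1, spacing))  # structural, horizontal
            if j + 1 < ny:
                springs.append((a, a + nx, spacing))  # structural, vertical
            if i + 1 < nx and j + 1 < ny:
                springs.append((a, a + nx + 1, spacing * math.sqrt(2)))  # shear, diagonal
    return pos, springs


def step(pos, vel, springs, pinned, dt, k=500.0, damping=0.98, mass=0.1, g=9.81):
    """Advance the cloth in place by one semi-implicit Euler step."""
    forces = [[0.0, 0.0, -mass * g] for _ in pos]  # gravity along -z
    for a, b, rest in springs:
        d = [pos[b][c] - pos[a][c] for c in range(3)]
        length = math.sqrt(sum(x * x for x in d))
        if length == 0.0:
            continue
        f = k * (length - rest) / length  # Hooke's law along the spring direction
        for c in range(3):
            forces[a][c] += f * d[c]
            forces[b][c] -= f * d[c]
    for p in range(len(pos)):
        if p in pinned:
            continue  # pinned particles (e.g. grasped corners) do not move
        for c in range(3):
            # Update velocity first, then position; the per-step scaling is a crude damping term.
            vel[p][c] = (vel[p][c] + dt * forces[p][c] / mass) * damping
            pos[p][c] += dt * vel[p][c]


cloth, springs = make_cloth(5, 5, spacing=0.1)
vel = [[0.0, 0.0, 0.0] for _ in cloth]
pinned = {0, 4}  # the two top corners, as if held by a gripper
for _ in range(200):  # 0.4 s of simulated time
    step(cloth, vel, springs, pinned, dt=0.002)
print("bottom-centre particle z:", round(cloth[22][2], 3))
```

The small time step keeps the explicit spring forces stable; stiffer springs or larger steps would call for an implicit integrator or a position-based method instead.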

Title: 3D reconstruction of deformable objects from RGB-D cameras: an omnidirectional inward-facing multi-camera system

Authors: Eva Curto, Helder Araujo

Conference: 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP’2021)

Abstract: This paper describes a system made up of several inward-facing cameras that can reconstruct deformable objects through synchronous acquisition of RGB-D data. The configuration of the camera system allows the acquisition of omnidirectional 3D images of the objects. The paper describes the structure of the system as well as an approach for extrinsic calibration, which allows the estimation of the coordinate transformations between the cameras. Reconstruction results are also presented.
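The abstract does not detail the calibration procedure itself. Once extrinsic calibration has produced each camera's pose relative to a common reference frame, the data from every camera can be expressed in a single frame before fusion. The Python sketch below shows that step under this assumption, using 4x4 homogeneous transforms where `T_ref_c` maps points from a camera frame to the reference frame; the function names are hypothetical, and the example uses two cameras facing each other, as in an inward-facing arrangement.

```python
def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and a translation t."""
    return [list(R[0]) + [t[0]], list(R[1]) + [t[1]], list(R[2]) + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def mat_mul(A, B):
    """Product of two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(T):
    """Inverse of a rigid transform: [R | t]^-1 = [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t_inv = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return make_transform(Rt, t_inv)


def transform_point(T, p):
    """Apply a 4x4 transform to a 3D point."""
    return [sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]


def relative_transform(T_ref_ci, T_ref_cj):
    """Transform mapping points from camera j's frame into camera i's frame."""
    return mat_mul(invert_rigid(T_ref_ci), T_ref_cj)


# Two inward-facing cameras: camera 1 at z = -1 looking along +z,
# camera 2 at z = +1 rotated 180 degrees about y, so it looks along -z.
T_ref_c1 = make_transform([[1, 0, 0], [0, 1, 0], [0, 0, 1]], [0, 0, -1])
T_ref_c2 = make_transform([[-1, 0, 0], [0, 1, 0], [0, 0, -1]], [0, 0, 1])
T_c1_c2 = relative_transform(T_ref_c1, T_ref_c2)

# A point 1 m in front of camera 2, offset 0.1 m along camera 2's x axis, seen from camera 1.
p_c1 = transform_point(T_c1_c2, [0.1, 0.0, 1.0])
print(p_c1)
```

Chaining transforms through a shared reference frame in this way means each camera only needs one calibrated pose, rather than a separate calibration for every camera pair.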


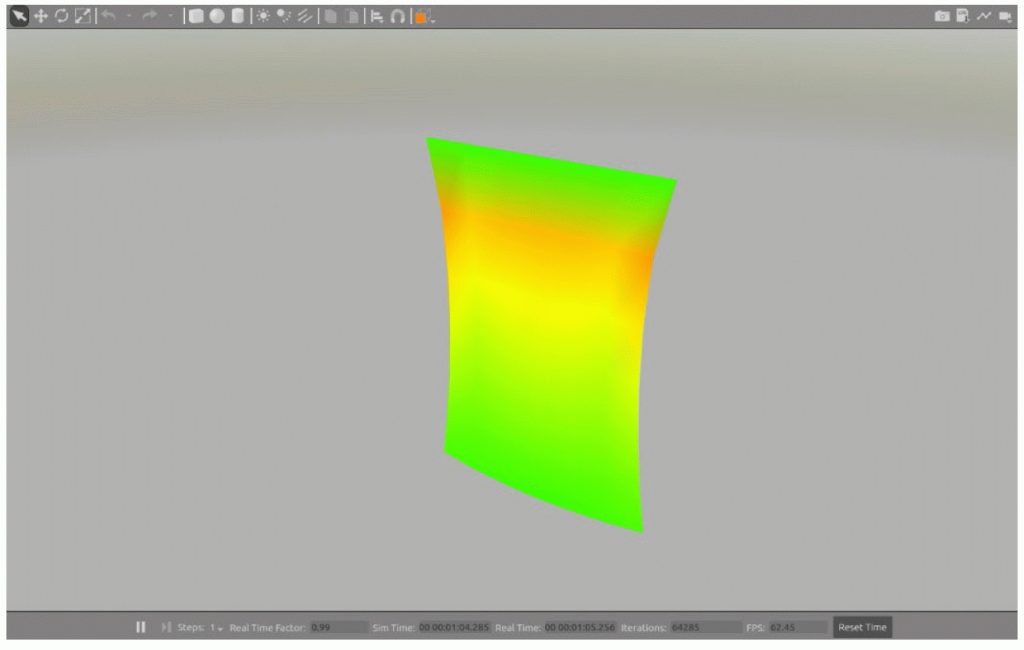

Title: Intel RealSense SR305, D415 and L515: Experimental evaluation and comparison of depth estimation

Authors: Francisco Lourenco, Helder Araujo

Conference: 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP’2021)

Abstract: In the last few years, Intel has launched several low-cost RGB-D cameras. Three of these cameras are the SR305, the D415 and the L515. These three cameras are based on different operating principles: the SR305 is based on structured light projection, the D415 on stereo vision that also projects a random dot pattern, and the L515 on LiDAR. In addition, all three provide RGB images. In this paper, we perform an experimental analysis and comparison of the depth estimation of the three cameras.
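A common way to compare depth sensors, though not necessarily the protocol used in the paper, is to image a flat wall: a plane is fitted to the measured points, the RMS of the residuals indicates precision (noise), and the offset of the mean depth from the known wall distance indicates accuracy (bias). The Python sketch below computes both metrics, assuming the points are already in the camera frame (in metres) and the wall is roughly fronto-parallel; `fit_plane` and `depth_metrics` are hypothetical names, and the example uses synthetic points rather than measured data.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c via the 3x3 normal equations (Cramer's rule)."""
    A = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0] * 3
    for x, y, z in points:
        row = (x, y, 1.0)
        for i in range(3):
            rhs[i] += row[i] * z
            for j in range(3):
                A[i][j] += row[i] * row[j]
    d = det3(A)
    coeffs = []
    for k in range(3):
        # Replace column k with the right-hand side (Cramer's rule).
        Ak = [[rhs[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        coeffs.append(det3(Ak) / d)
    return tuple(coeffs)


def depth_metrics(points, true_distance):
    """Return (RMS plane residual, mean depth minus true distance), both in metres."""
    a, b, c = fit_plane(points)
    residuals = [z - (a * x + b * y + c) for x, y, z in points]
    rms = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    bias = sum(z for _, _, z in points) / len(points) - true_distance
    return rms, bias


# Synthetic, noise-free points on a wall at 1.01 m, compared against a nominal 1.00 m.
wall = [(0.1 * i, 0.1 * j, 1.01) for i in range(-2, 3) for j in range(-2, 3)]
rms, bias = depth_metrics(wall, true_distance=1.0)
print(f"RMS residual: {rms:.6f} m, bias: {bias:.6f} m")
```

Repeating such measurements at several distances is one way to expose how error grows with range, which is expected to differ between structured-light, stereo and LiDAR sensors.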


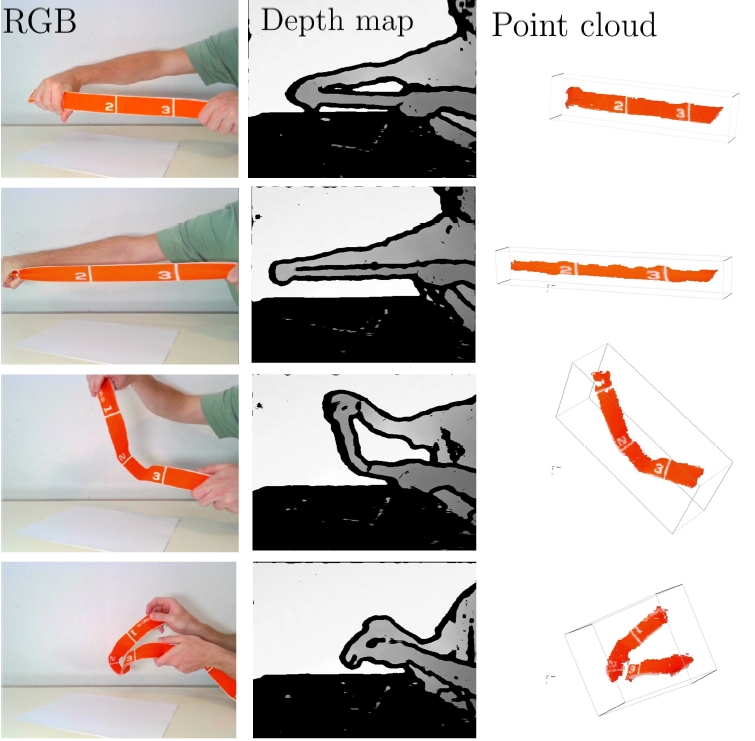

Title: RGB-D Sensing of Challenging Deformable Objects

Authors: Ignacio Cuiral-Zueco and Gonzalo Lopez-Nicolas

Workshop: Workshop on Managing deformation: A step towards higher robot autonomy (MaDef), 25 October – 25 December, 2020

Abstract: Deformable object tracking is a prominent problem in recent research on robotic shape manipulation. Moreover, texture-less objects that undergo large deformations and movements lead to difficult scenarios. Three RGB-D sequences of different challenging scenarios are processed in order to evaluate the robustness and versatility of a deformable object tracking method. Everyday objects with different complex characteristics are manipulated and tracked. The tracking system, pushed out of its comfort zone, performs satisfactorily.

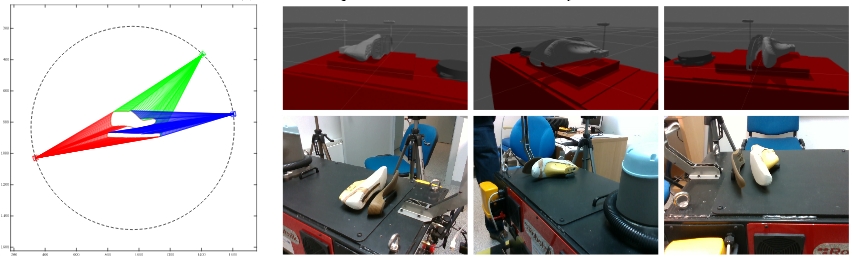

Title: Experimental multi-camera setup for perception of dynamic objects

Authors: Rafael Herguedas, Gonzalo Lopez-Nicolas and Carlos Sagues

Workshop: Robotic Manipulation of Deformable Objects (ROMADO), 25 October – 25 December, 2020

Abstract: Currently, perception and manipulation of dynamic objects are open research problems. In this paper, we show a proof of concept of a multi-camera robotic setup intended to perform coverage of dynamic objects. The system includes a set of RGB-D cameras, which are positioned and oriented to cover the object's contour with the required visibility. An algorithm from a previous study allows us to minimize the number of cameras and configure them so that collisions and occlusions are avoided. We validate the platform with the Robot Operating System (ROS), both in simulations with the Gazebo software and in real experiments with Intel RealSense modules.
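The abstract does not describe the earlier algorithm. As an illustration only, the camera-minimization part of such a problem can be approximated with the classic greedy set-cover heuristic: given, for each candidate camera pose, the set of contour points it sees, repeatedly pick the pose that covers the most still-uncovered points. The Python sketch below assumes the visibility sets are precomputed and ignores the collision and occlusion constraints mentioned in the abstract; `greedy_camera_cover` and the camera names are hypothetical.

```python
def greedy_camera_cover(visibility, n_points):
    """Pick candidate cameras until every contour point 0..n_points-1 is seen at least once.

    visibility maps a camera id to the set of contour-point indices visible from that pose.
    Greedy set cover is not guaranteed to be minimal, but stays within a logarithmic
    factor of the optimum.
    """
    uncovered = set(range(n_points))
    chosen = []
    while uncovered:
        # Camera that sees the most points not yet covered (ties: first in dict order).
        best = max(visibility, key=lambda cam: len(visibility[cam] & uncovered))
        gain = visibility[best] & uncovered
        if not gain:
            raise ValueError("some contour points are not visible from any candidate camera")
        chosen.append(best)
        uncovered -= gain
    return chosen


visibility = {
    "front": {0, 1, 2, 3},
    "back": {4, 5, 6, 7},
    "left": {2, 3, 4},
    "right": {6, 7, 0},
    "top": {1, 5},
}
print(greedy_camera_cover(visibility, 8))  # ['front', 'back']
```

Constraints such as avoiding collisions between cameras or occlusions by the robot could be added by filtering the candidate poses before the greedy loop.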