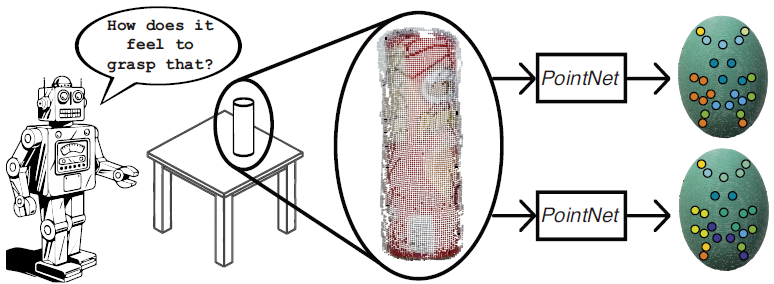

Title: Generation of tactile data from 3D vision and target robotic grasps

Authors: B.S. Zapata-Impata, P. Gil, Y. Mezouar, F. Torres

Journal: IEEE Transactions on Haptics, July 2020

Abstract: Tactile perception is a rich source of information for robotic grasping: it allows a robot to identify a grasped object and assess the stability of a grasp, among other things. However, the tactile sensor must come into contact with the target object in order to produce readings. As a result, tactile data can only be attained if a real contact is made. We propose to overcome this restriction by employing a method that models the behaviour of a tactile sensor using 3D vision and grasp information as a stimulus. Our system regresses the quantified tactile response that would be experienced if this grasp were performed on the object. We experiment with 16 items and 4 tactile data modalities to show that our proposal learns this task with low error.
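The abstract frames tactile generation as a regression problem. The input combines visual information about the object with the parameters of the intended grasp, and the output is the tactile reading the sensor would produce. The sketch below only illustrates that input/output framing. It uses a toy linear regressor trained by stochastic gradient descent on synthetic data and is not the authors' model. The feature layout (`N_VISUAL`, `N_GRASP`, `N_TAXELS`), the function names and the data are all hypothetical.

```python
import random

def predict(weights, bias, x):
    """Hypothetical linear map from a (visual + grasp) feature vector to taxel values."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def mse(weights, bias, xs, ys):
    """Mean squared error over all taxels of all samples."""
    total, count = 0.0, 0
    for x, y in zip(xs, ys):
        for p, t in zip(predict(weights, bias, x), y):
            total += (p - t) ** 2
            count += 1
    return total / count

def fit(xs, ys, lr=0.05, epochs=200):
    """Per-sample stochastic gradient descent on the squared error."""
    n_in, n_out = len(xs[0]), len(ys[0])
    weights = [[0.0] * n_in for _ in range(n_out)]
    bias = [0.0] * n_out
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            pred = predict(weights, bias, x)
            for j in range(n_out):
                err = pred[j] - y[j]
                for i in range(n_in):
                    weights[j][i] -= lr * err * x[i]
                bias[j] -= lr * err
    return weights, bias

# Synthetic stand-in data (assumption): 4 "visual" features and 2 "grasp"
# parameters per sample, mapped linearly to 3 taxel readings.
random.seed(0)
N_VISUAL, N_GRASP, N_TAXELS = 4, 2, 3
n_in = N_VISUAL + N_GRASP
true_w = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(N_TAXELS)]
xs = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(50)]
ys = [[sum(w * xi for w, xi in zip(row, x)) for row in true_w] for x in xs]

w, b = fit(xs, ys)
print("training MSE:", mse(w, b, xs, ys))
```

Because the synthetic targets are an exact linear function of the inputs, the training error should fall close to zero. Real tactile data generated from 3D vision would call for a far richer, nonlinear model.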

Paper at IEEE